How Latency Works

- Live Versions: All

- Operating System: All

Note: The following article is purely informational. For more specific troubleshooting advice, please take a look at our article on How to reduce Latency.

- What Latency Is

- What Causes Latency

- Where Latency Occurs

- How Audio Interface Latency is Calculated

- Settings in Live that affect latency

- Device settings that affect latency

- Other factors that cause latency

- Use Cases

- How Live adapts to latency

- Preparing your system for sample-accurate recording

What Latency Is

Latency refers to a short period of delay between when a signal enters a system and when it emerges from it. In a Digital Audio Workstation, some amount of latency is always necessary to allow audio data to be captured by your audio interface, passed to your DAW (or other application), processed by instruments/effects within the DAW, and then passed back out to the output of the audio interface.

Latency cannot be avoided, but it can be understood!

What Causes Latency

In digital audio, latency is introduced by signal conversion and audio buffers within the signal path.

An Analog-to-Digital (AD) signal converter transforms incoming audio into data that the computer can process. Conversely, Digital-to-Analog (DA) Converters convert data into an analog audio signal that is sent to the speakers or audio effects. In most studios, this task is handled by an audio interface.

An audio buffer is a region of memory used to temporarily store chunks of data as the signal travels from one location to the next. Larger buffers take longer to fill and process, which increases the overall latency.

Where Latency Occurs

- The audio interface

- The audio interface driver

- The operating system (which may require additional time to process and mix streams from other applications before passing them out to the speakers)

- Between the audio interface and the DAW

- In the DAW’s signal processing (e.g., monitored tracks, native effects, plug-ins, etc.)

How Audio Interface Latency is Calculated

For a signal to move from one point in the chain to the next, at least one audio buffer must be fully processed. As such, the minimum latency is equivalent to the time required to process a single audio buffer at a given sample rate.

Or, in more practical terms...

Buffer Size (number of samples) ÷ Sample Rate (kHz) = Expected Latency (ms).

For example, while running with a Buffer Size of 256 samples and a Sample Rate of 44.1 kHz, an audio interface will convert the incoming signal with 5.8 milliseconds of expected latency before sending it into Live (256 samples ÷ 44.1 kHz).

You may have noticed that the "Overall Latency" shown in Live's Preferences does not match the formula above. While this may seem counterintuitive, it is for a good reason:

- The formula above applies to a single stage of signal conversion. However, the Overall Latency of an interface includes input and output latency. An easy way to calculate this is to multiply the value above by two.

- The result is less predictable when Digital Signal Processing (DSP) is involved, since additional layers of processing/conversion affect the overall latency by varying amounts. For several examples, please refer to the Use Cases section of this document.

- Some audio interfaces report inaccurate latency values.
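The formula and the doubling rule above can be sketched in a few lines of Python. This is an illustrative estimate only: the function names and the optional `extra_ms` parameter (standing in for converter or driver overhead) are assumptions made for this example, not values that Live or any driver reports.

```python
def buffer_latency_ms(buffer_size_samples: int, sample_rate_hz: float) -> float:
    """Latency of a single buffer stage, in milliseconds."""
    return buffer_size_samples / sample_rate_hz * 1000.0


def estimated_round_trip_ms(buffer_size_samples: int, sample_rate_hz: float,
                            extra_ms: float = 0.0) -> float:
    """Input stage plus output stage, plus any extra overhead (hypothetical)."""
    one_way = buffer_latency_ms(buffer_size_samples, sample_rate_hz)
    return 2 * one_way + extra_ms


print(round(buffer_latency_ms(256, 44_100), 1))        # 5.8
print(round(estimated_round_trip_ms(256, 44_100), 1))  # 11.6
```

The value shown in Live's Preferences may still differ from this estimate, for the reasons listed above.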

Settings in Live that affect latency

Delay Compensation

Reduced Latency When Monitoring

Buffer Size

As mentioned, audio is processed in chunks of individual samples. Processing in larger chunks can result in audible latency, whereas smaller chunks generally create a lower global latency value. However, smaller chunks must be loaded and unloaded much more frequently to maintain consistent playback, which places a higher load on the CPU. If the CPU cannot keep up, the missing chunks can be heard as crackles or dropouts.
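As a rough illustration of this trade-off, the sketch below computes, for a few buffer sizes, how long the CPU has to finish each chunk and how many chunks it must deliver per second. The buffer sizes and the function name `buffer_stats` are arbitrary choices for the example.

```python
def buffer_stats(buffer_size_samples: int, sample_rate_hz: float):
    """Return (deadline per buffer in ms, buffers needed per second)."""
    deadline_ms = buffer_size_samples / sample_rate_hz * 1000.0
    buffers_per_second = sample_rate_hz / buffer_size_samples
    return round(deadline_ms, 2), round(buffers_per_second, 1)


for size in (64, 256, 1024):
    print(size, buffer_stats(size, 44_100))
# 64 (1.45, 689.1)
# 256 (5.8, 172.3)
# 1024 (23.22, 43.1)
```

A 64-sample buffer gives the CPU about 1.45 ms per chunk and requires roughly 689 chunks per second, which is why very small buffers are more prone to dropouts.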

Sample Rate

In digital recording, audio is represented by a series of data points called “samples.” These samples are strung together in rapid succession by the computer, which results in an audible waveform. The audio sample rate determines the frequency at which these samples are recorded, generated, and output by the system. (Higher sample rates will result in slightly lower global latency but create more demand on the CPU).
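To put numbers on this, the following sketch holds the buffer size fixed and varies the sample rate. The 256-sample buffer and the 44.1 kHz reference rate are example values, and treating the data rate as a stand-in for CPU demand is a simplifying assumption.

```python
BUFFER_SIZE = 256  # samples; arbitrary example value


def latency_and_relative_rate(sample_rate_hz: float,
                              reference_rate_hz: float = 44_100):
    """Return (buffer latency in ms, data rate relative to the reference)."""
    latency_ms = BUFFER_SIZE / sample_rate_hz * 1000.0
    relative_rate = sample_rate_hz / reference_rate_hz
    return round(latency_ms, 2), round(relative_rate, 2)


for rate in (44_100, 48_000, 96_000):
    print(rate, latency_and_relative_rate(rate))
# 44100 (5.8, 1.0)
# 48000 (5.33, 1.09)
# 96000 (2.67, 2.18)
```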

Track Delays

The output of any track can be delayed or pre-delayed in milliseconds to compensate for human, acoustic, hardware, and other real-world delays (or for creative purposes). However, the amount of delay applied to a track’s output will be interpreted as latency when the signal is monitored in real time.

Because signals cannot be processed before they happen, negative delay values do not actually move the track’s signal ahead in time. In reality, every other track within the session is delayed by that amount to achieve the same effect.
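The handling of negative track delays can be illustrated with a small model. This is a conceptual sketch, not Live's implementation: the track names and the function `effective_delays_ms` are hypothetical.

```python
def effective_delays_ms(track_delays_ms: dict) -> dict:
    """Turn requested delays (possibly negative) into real, non-negative delays.

    The most negative request becomes 0 ms, and every other track is
    pushed later by the same amount, preserving the relative timing.
    """
    earliest = min(0.0, min(track_delays_ms.values(), default=0.0))
    return {name: delay - earliest for name, delay in track_delays_ms.items()}


requested = {"Drums": 0.0, "Bass": -10.0, "Vocals": 5.0}
print(effective_delays_ms(requested))
# {'Drums': 10.0, 'Bass': 0.0, 'Vocals': 15.0}
```

The bass still sounds 10 ms ahead of the drums, but only because everything else has been moved 10 ms later.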

Graphics

To reserve computing resources for the audio stream, Live does not latency-compensate its graphics. This can result in noticeable graphical lag in Live Sets with an extreme amount of internal latency, but it is imperceptible in most other cases.

Editing in Max for Live

Max for Live devices will introduce additional latency when their editor window is open.

Device settings that affect latency

Note: With the exception of the first topic, the points below apply to both Live’s native devices and compatible third-party plug-ins.

Related: How to view the latency of a plugin or Live device

Plug-In Delay Compensation

If the CPU load of a plug-in is low enough for the processor to calculate all of its functions within a single audio buffer, it will not create any additional latency. If not, the plug-in will report the time discrepancy to the DAW, which delays the output of the entire signal path by the same amount to maintain consistent playback.

Some plug-ins can cause a significant amount of latency due to the amount of processing required for high-quality output. This can be frustrating when monitoring signals in larger sessions since a few high-CPU devices can significantly delay the audio output for the entire session.

External Instrument & External Audio Effect

As these devices send and receive audio from outside Live, they will delay the audio by the Overall Latency amount shown in Live's Preferences. In addition, setting the 'Hardware Latency' slider to any amount other than zero will increase the global latency.

Lookahead

Some devices include a "lookahead" feature, which applies a slight delay to incoming signals (e.g., Live’s Compressor uses Lookahead to apply dynamic changes that would be impossible to create in real time).
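The idea behind lookahead can be sketched as a simple delay line: the audio is held back by a fixed number of samples, which gives a device time to react to what is coming. The code below is a generic illustration and not how Live's Compressor is implemented; the 1 ms lookahead and 48 kHz sample rate are example values, and both function names are hypothetical.

```python
from collections import deque


def lookahead_to_samples(lookahead_ms: float, sample_rate_hz: float) -> int:
    """Convert a lookahead time to a whole number of samples."""
    return round(lookahead_ms * sample_rate_hz / 1000.0)


def delay_signal(samples, delay_samples: int):
    """Delay a signal by `delay_samples`, padding the start with silence."""
    line = deque([0.0] * delay_samples)
    delayed = []
    for sample in samples:
        line.append(sample)             # newest sample enters the line
        delayed.append(line.popleft())  # oldest sample leaves it
    return delayed


print(lookahead_to_samples(1.0, 48_000))       # 48
print(delay_signal([1.0, 2.0, 3.0, 4.0], 2))   # [0.0, 0.0, 1.0, 2.0]
```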

Spectral Analysis/Processing

A relatively large audio buffer is needed to convert a signal from the time domain to the frequency domain for processing or display. As a result, devices that do any spectral processing will likely create some latency.
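For a sense of scale, the sketch below estimates the delay implied by collecting one full analysis window before any output can be produced. It assumes the device waits for a complete window; real devices vary (for example, by overlapping windows), and the window sizes shown are example values.

```python
def window_latency_ms(window_size_samples: int, sample_rate_hz: float) -> float:
    """Time needed to collect one full analysis window, in milliseconds."""
    return window_size_samples / sample_rate_hz * 1000.0


for window in (1024, 2048, 4096):
    print(window, round(window_latency_ms(window, 44_100), 2))
# 1024 23.22
# 2048 46.44
# 4096 92.88
```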

Other advanced processing options (Oversampling / Convolution / etc.)

Some devices require huge sample buffers to make intelligent decisions about how to behave, not just based on a single instant in time (one sample) but on a period of time (a larger block of samples). This is especially true for mastering plugins such as iZotope’s Ozone, which introduces a significant (but necessary!) amount of latency to perform its operations.

Other factors that cause latency

Inefficient/outdated audio interface drivers

On Windows machines, ASIO drivers generally perform better than MME/DirectX.

Outdated drivers can also perform poorly after outside changes, such as operating system updates or changes made to the DAW. Manufacturers often release firmware updates to compensate for these changes or refine the device’s performance.

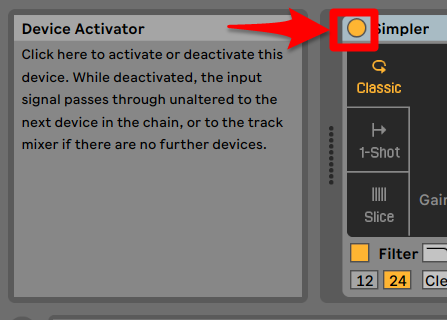

Deactivated Instruments & Effects

The yellow button in the top-left corner of every instrument and effect is used to deactivate the device. Since this parameter can be freely automated/modulated by the user, removing the device’s latency every time the button is toggled would create noticeable distortions in the output. To prevent this, a device's latency always remains in effect, even when the device is turned off.

Video

Video is not latency compensated in Live.

Use Cases

Understanding latency can be difficult since the symptoms often differ from their root cause. Below are a few examples that illustrate this.

Note: The latency times shown below are simplified for demonstration purposes. Actual results may vary.

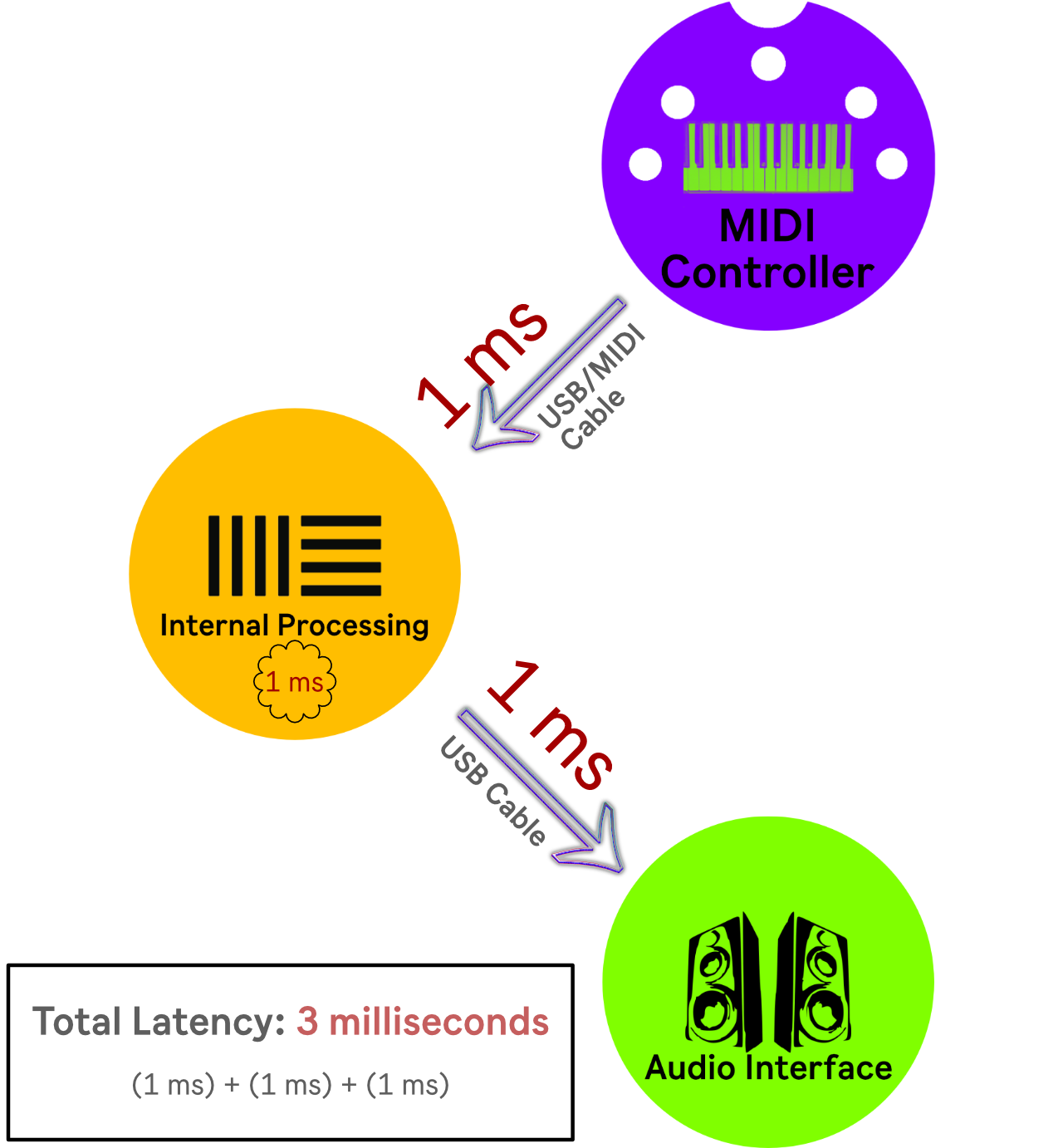

Scenario 1 - MIDI Latency

In this scenario, the user experiences a latency between pressing a note on their MIDI controller and hearing the resulting audio output.

- It takes 1 ms for the signal to go from the keyboard output to the computer’s input.

- Once the MIDI signal is in the computer, it takes 1 ms for it to be processed internally by the DAW (which converts it into audio).

- The resulting audio signal takes 1 ms to travel out of the DAW, back to the audio interface, and to the speakers.

Since the signal travels in a linear path, the stages cannot occur simultaneously. Their delays add up, and the user experiences an overall latency of 3 milliseconds.

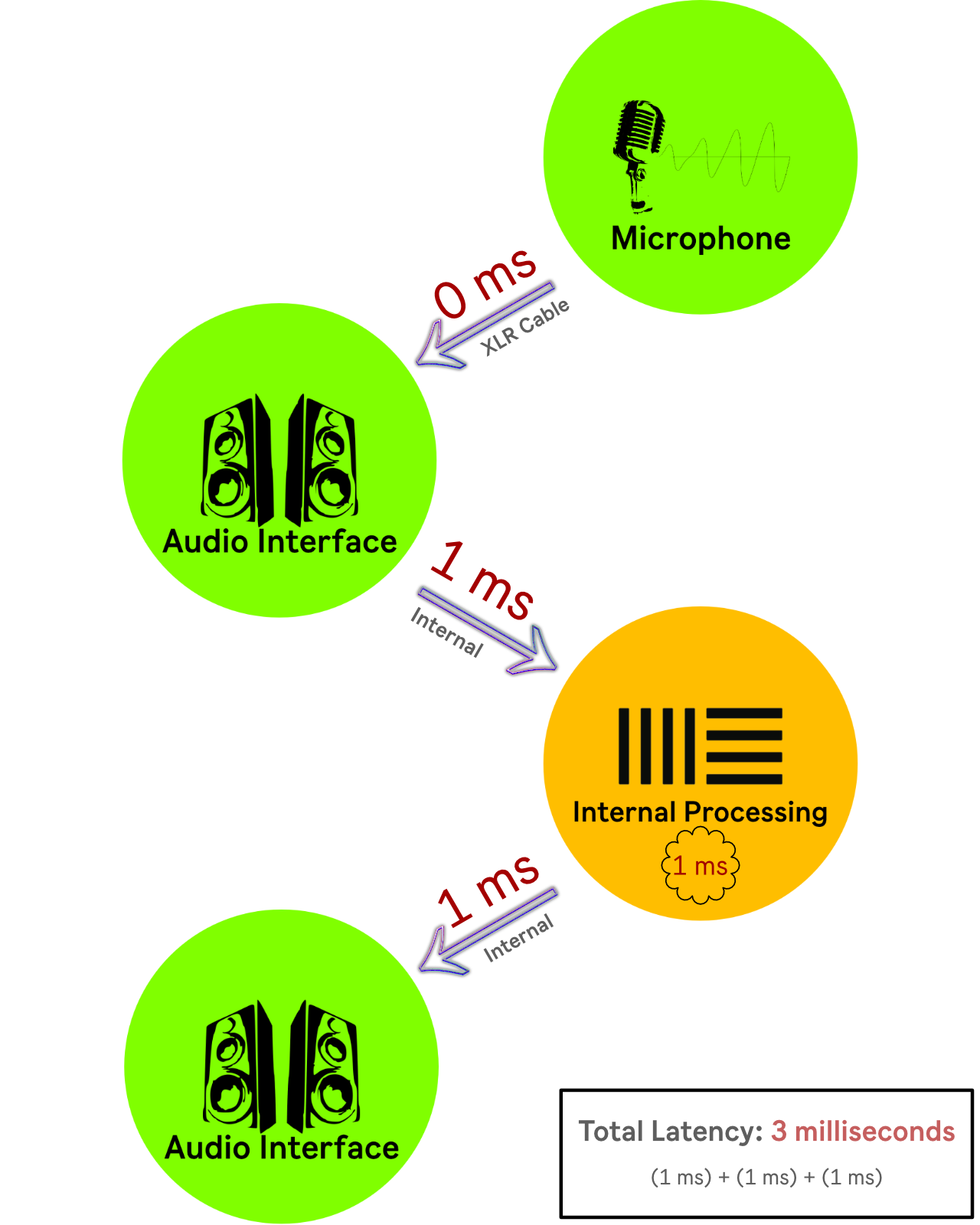

Scenario 2 - Audio Latency

In this scenario, the user experiences a latency between speaking into a microphone and hearing the resulting audio output.

- It takes 0 ms for the signal to go from the microphone to the audio interface's input (since analog signals are almost instantaneous).

- The audio interface takes 1 ms to convert the signal into a digital format and pass it to the computer.

- Once inside the computer, the signal takes 1 ms to be processed internally by the DAW.

- The resulting audio signal takes 1 ms to travel out of the DAW, through the computer, to the audio interface, and (finally) to the speakers.

Since the signal travels in a linear path, the stages cannot occur simultaneously. Their delays add up, and the user experiences an overall latency of 3 milliseconds.
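Both scenarios come down to adding up the delay of each stage in series. The sketch below uses the simplified figures from the scenarios; the stage labels are shortened paraphrases chosen for this example.

```python
# Each stage is (description, latency in ms), using the simplified values above.
midi_path = [
    ("keyboard to computer", 1),
    ("DAW processing", 1),
    ("DAW to speakers", 1),
]
audio_path = [
    ("microphone to interface", 0),
    ("A/D conversion", 1),
    ("DAW processing", 1),
    ("DAW to speakers", 1),
]


def total_latency_ms(stages) -> int:
    """Stages run one after another, so their latencies simply add up."""
    return sum(latency for _, latency in stages)


print(total_latency_ms(midi_path))   # 3
print(total_latency_ms(audio_path))  # 3
```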

How Live adapts to latency

Live establishes the amount of latency caused by each component within the system to track the global latency value for the session.

When Delay Compensation is enabled, this value is determined by whichever signal path causes the highest amount of latency, so Live can delay each signal path's output by the necessary amount of time to match it. As a result, the audio played through the Master track is identical to that of a latency-free configuration. (With Delay Compensation disabled, however, the global latency value is ignored, and each point of monitoring contains an amount of latency unique to the signal path behind it).

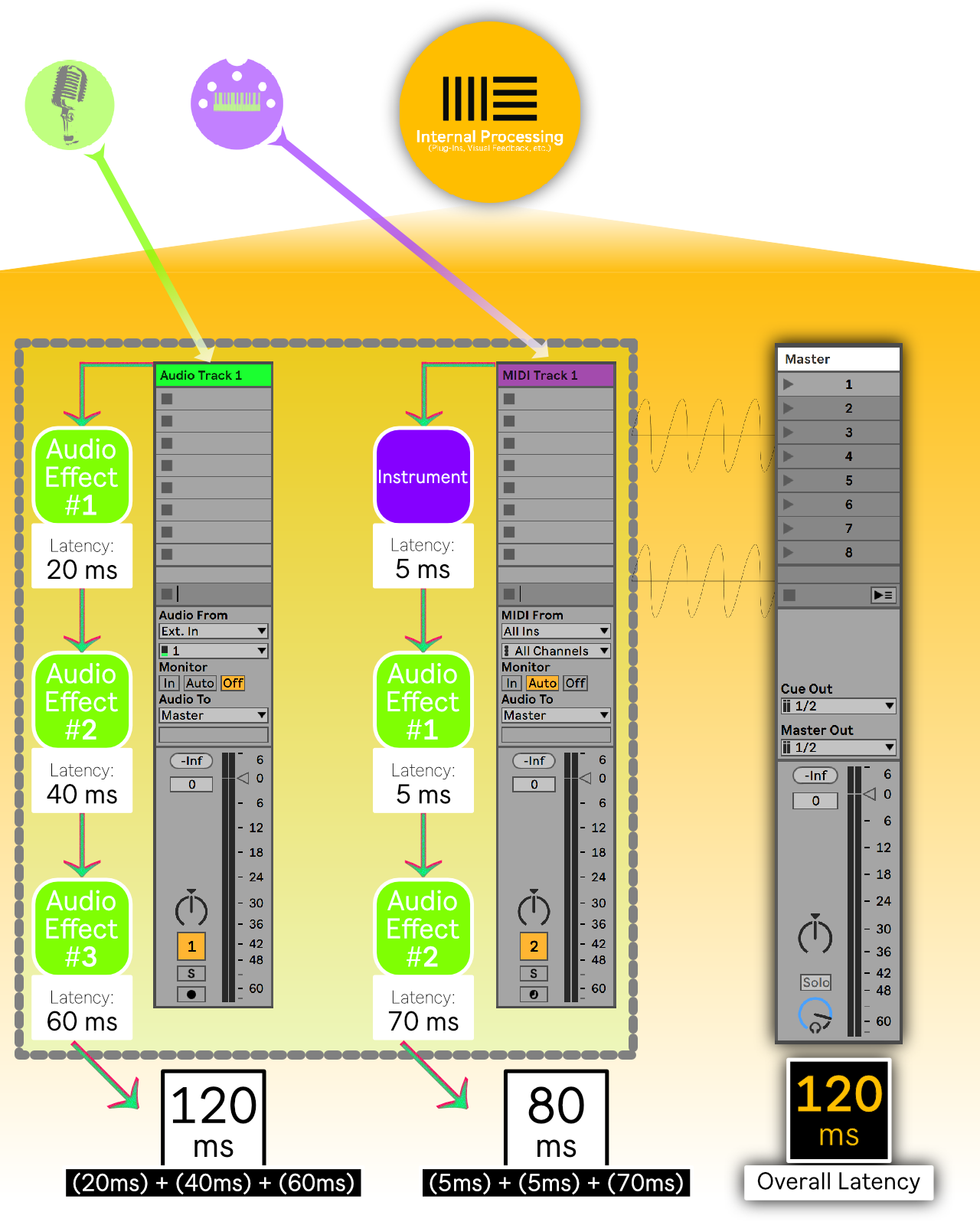

Take, for example, the following Live Set with two tracks...

“Audio Track 1” contains several devices that create an overall latency of 120 ms, and “MIDI Track 1” has a latency of 80 ms. With Delay Compensation active, both tracks are delayed by a total of 120 ms, so even the track with the highest amount of latency can keep up with the rate of playback.

However, "MIDI Track 1" contains a software instrument. To play it, you need to set the monitor to In or Auto and arm the track for recording. Since the track is being delayed by 120 ms (so it plays in time with the rest of the tracks), it will feel quite sluggish when playing.

When Reduced Latency When Monitoring is turned on, however, the overall latency of the Set is bypassed for monitored tracks. So when "MIDI Track 1" is monitored, it will only contain the 80 ms of latency caused by the devices directly within its signal path. This can be helpful in situations where the user wants to record new material into an otherwise latency-heavy session but still wants the rest of the tracks to play with Delay Compensation enabled.
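The behavior described in this example can be modelled in a few lines. This is a simplified sketch of the concept, not Live's actual implementation; the function names are hypothetical, and the latency values are the ones from the example.

```python
def playback_delay_ms(path_latency_ms: dict, delay_compensation: bool = True) -> dict:
    """Total delay each track plays back with."""
    if not delay_compensation:
        # Each track keeps only the latency of its own signal path.
        return dict(path_latency_ms)
    # Every track is aligned to the slowest signal path.
    global_latency = max(path_latency_ms.values(), default=0)
    return {name: global_latency for name in path_latency_ms}


def monitoring_latency_ms(path_latency_ms: dict, track: str,
                          reduced_latency_when_monitoring: bool) -> int:
    """Latency felt on a monitored track while Delay Compensation is on."""
    if reduced_latency_when_monitoring:
        return path_latency_ms[track]
    return max(path_latency_ms.values())


paths = {"Audio Track 1": 120, "MIDI Track 1": 80}
print(playback_delay_ms(paths))                            # both tracks: 120
print(monitoring_latency_ms(paths, "MIDI Track 1", False))  # 120
print(monitoring_latency_ms(paths, "MIDI Track 1", True))   # 80
```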

Preparing your system for sample-accurate recording

When recording a new audio track into Live, it can often help to set the track’s monitor setting to “Off” and monitor the instrument signal directly while recording (bypassing Live).

This presents a challenge, however, since the resulting recording would sound slightly off-time due to the latency added by the system at the time of recording. To adjust for this timing discrepancy, Live automatically offsets the recording by whatever amount of latency is reported from the audio interface. Ideally, this results in a recording that is perfectly in time with the signal that the performer heard when recording it.

However, some interfaces report this latency value inaccurately, and Driver Error Compensation (DEC) allows Live to adjust for these incorrect values. Live includes a built-in lesson with a specially calibrated Set for setting DEC. The lesson can be found in the Help View (Help → Help View → Audio I/O → Page 18 of the Lesson, click the link for Driver Error Compensation). To complete it, you will need a cable and an audio interface with at least one physical input and output.

Note: While Driver Error Compensation will have an effect on the placement of the recorded material, it has no effect on real-time latency.
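The relationship between the reported latency, the recording offset, and Driver Error Compensation can be illustrated with a simplified model. The sign convention, the function name, and all numbers below are assumptions made for this example; they do not describe Live's internal calculation.

```python
def placed_position_ms(captured_at_ms: float, reported_latency_ms: float,
                       driver_error_compensation_ms: float = 0.0) -> float:
    """Where a recorded event lands on the timeline after the offset is applied."""
    return captured_at_ms - (reported_latency_ms + driver_error_compensation_ms)


# Hypothetical case: a note played at 1000 ms reaches the computer 12 ms later,
# but the interface only reports 10 ms of latency.
played_at = 1000.0
captured_at = played_at + 12.0

print(placed_position_ms(captured_at, reported_latency_ms=10.0))
# 1002.0 (2 ms late)
print(placed_position_ms(captured_at, reported_latency_ms=10.0,
                         driver_error_compensation_ms=2.0))
# 1000.0 (in time)
```

In this model, the compensation only changes where the recording is placed; the 12 ms the signal takes to arrive is unaffected.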